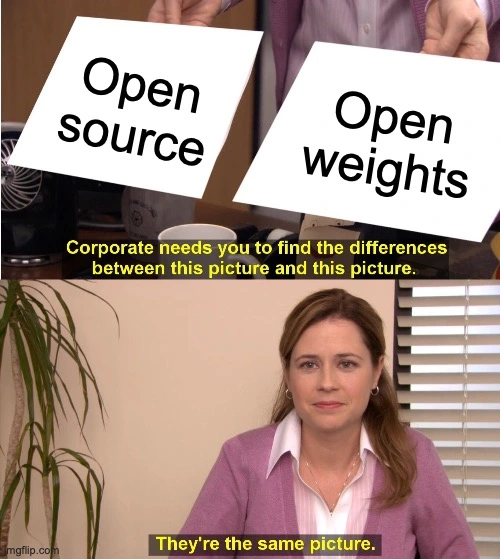

Whenever a new ML model with downloadable weights comes out, there's a choir of people praising open source models. The Llamas, the Mistrals, the Phis, etc. In all their greatness, they're a galaxy away from open source. You can download the weights, but that's where the openness ends.

Training data? Here’s a vague description of records you can’t access. Training code? Some high level details and a mountain of secret tricks. Architecture? You can have it, since it isn’t so relevant nowadays.

I'm not going to talk about the various ways companies release their models, or the motivations behind it. We'll do that another time. Instead, we'll work through what open source really means, and why you, dear reader, should think about what open means to you.

Databases

Machine learning models are like databases. They hold embedded information and you can query them; it's just that with models, there's only one kind of query you can make. If we put their components side by side, they would look something like this:

- the training code => the database source code;

- the training data => the data that you put in the database;

- the architecture => a bit like the schema;

- and the final weights => your final executable;

and there’s some client code for querying the system.

The mapping is a bit leaky and not exactly 1-to-1. If you want to get nitpicky, the training data influences the training process itself, and is present in the final binary. Similarly, the architecture is present during training too.

Nevertheless, when you say open source database, you think about the source code, not the binary that you run yourself. Sure, having a binary (e.g. Llama weights) rather than just a managed service behind an API (e.g. Claude or ChatGPT) is preferable in some circumstances. Still, no sane technical person would call MS SQL Server or Oracle Database open source, the way Postgres or SQLite are, just because you get to run them locally.

But Seb! I can finetune my model if I have the weights, but modifying executable binaries isn't a thing (at least not for practical customisation purposes). Your analogy doesn't make sense!

I hear you loud and clear.

Modding games

If you don't buy the database analogy, think about games. Computer, not tabletop.

Machine learning models are like games:

- the training code => the game source code;

- the training data => the game assets (textures, text and audio);

- the architecture => the overall universe/setting of the game that contextualises the above two;

- the weights => the game executable.

This is better. Much like with an ML model, your assets directly shape your final executable (runtime-loaded assets aside). You can view finetuning a bit like modding: on the closed end, you get to modify or add some assets; on the open end, you can manipulate the core mechanics.

Once again, when you say open source game, you think about the source code and the assets, not the binary. While modding is nice — who doesn’t like Thomas the Tank Engine replacing all dragons in Skyrim — you wouldn’t call The Elder Scrolls series open source. And they’re some of the most moddable games out there.

Intent matters

People care about open source because it allows them to inspect the software. In many cases, it allows them to modify it too, either upstream or in their own fork. Many doesn't mean all: not all open source projects are open contribution, and not all licences permit modifying the code either.

The value is in inspecting how things are done, and sometimes modifying such behaviour.

For ML models, the equivalent would be inspecting and modifying the training data and the training code.

Not finetuning Stable Diffusion on files from your

To top it all off, just because you can download the weights, and even finetune a model, doesn't mean that you can do whatever you want with it. The licence still applies, whether it concerns (non-)commercial use or what you may use your resulting model for.

It’s a spectrum

Open source models have been the de facto modus operandi for a lot of research for years. ResNets, BERT, and various GANs, to name but a few, were all open. And there are lots of new open models across the whole field. On the other hand, we've always had pure product models. I don't expect YouTube or Amazon to show me their recommender systems, nor ChatGPT & friends, for that matter.

It's with the advent of massive generative models that we've started getting these quasi-research, partially open models. Don't get me wrong, open weights are great, and I think they're a sizable step towards commoditising generative machine learning. Just don't call them open source.

Some food for thought to wrap this up: if the weights aren’t the moat, what is?